Virtualization is the new buzzword in the computing industry. Although ordinary customers and support engineers have only recently been exposed to the term, it has been a favorite of corporate IT divisions for the past few years.

Even if you are not familiar with the concepts of data center virtualization, I am sure you have come across the idea through software such as VirtualBox, VMware Workstation and Microsoft Virtual PC. Yes, the same kind of software you use to run multiple operating systems on your own computer is built on the basic concept behind corporate-level server virtualization.

Let us discuss some basic aspects of data center virtualization and how it differs from the typical toys you use for running virtual machines.

Any large organization that uses a large number of computers faces many issues with these systems. In my personal experience, most company managements treat the IT division as an evil that eats up a big chunk of the company's revenue. Computers and the software installed on them demand constant upgrades, and the life span of a typical technology is relatively short, forcing companies to purchase new systems and peripherals almost yearly. In addition, the power consumption of these systems, the e-waste they create and the associated environmental problems are also big issues.

To deal with such issues, most companies now pay attention to power management: they implement green PC standards, use LCDs instead of CRTs, and use CPU throttling and similar techniques to cut down the power consumption of their systems.

But these options for power reduction and energy saving are not feasible in your company's data center. The data center is the place where you keep all your servers in a secure, temperature-controlled room. (Nowadays it is more fashionable to say data center than server room.)

The servers in the data center manage the company's data flow, and companies expect them to have an availability level of almost 99.999%. An average server with RAID controllers and multiple hard disks can consume more than 1 kW of electricity, and a typical data center has hundreds of servers operating 24 hours a day, 7 days a week. We cannot apply aggressive power management to these servers, as customers need their full performance all the time.
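To get a feel for what an availability target like 99.999% means, here is a minimal sketch that converts a percentage into a yearly downtime budget. The function name is my own, and the calculation assumes a non-leap year.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the yearly downtime budget, in minutes, for an availability target."""
    unavailable_fraction = 1 - availability_percent / 100
    return MINUTES_PER_YEAR * unavailable_fraction


# Compare a few common targets, including the "five nines" figure from the text.
for target in (99.0, 99.9, 99.999):
    minutes = allowed_downtime_minutes(target)
    print(f"{target}% availability allows about {minutes:.2f} minutes of downtime per year")
```

At 99.999% the budget works out to a little over five minutes per year, which is why these servers cannot simply be put to sleep to save power.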

The secondary issue is that these machines convert almost all of the power they consume into heat, which naturally warms up the data center. So we have to use high-power air conditioners to keep the temperature of the data center under control.

This means that the servers consume power, and then additional power is consumed to manage the heat they produce. So even though we rarely think about it, corporate data centers are among the culprits behind global warming 🙂
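The double cost of server power plus cooling power can be put into rough numbers. The sketch below is only a back-of-the-envelope estimate: the 1 kW per server figure comes from the discussion above, while the count of 200 servers and the cooling overhead of 0.5 kW per kW of server load are assumptions I picked for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # servers run around the clock


def annual_energy_kwh(servers: int, kw_per_server: float, cooling_kw_per_it_kw: float) -> dict:
    """Estimate yearly energy use for the servers and for the cooling they require."""
    it_load_kw = servers * kw_per_server
    cooling_load_kw = it_load_kw * cooling_kw_per_it_kw  # assumed overhead ratio
    it_kwh = it_load_kw * HOURS_PER_YEAR
    cooling_kwh = cooling_load_kw * HOURS_PER_YEAR
    return {"it_kwh": it_kwh, "cooling_kwh": cooling_kwh, "total_kwh": it_kwh + cooling_kwh}


# Assumed scenario: 200 servers at 1 kW each, with 0.5 kW of cooling per kW of load.
estimate = annual_energy_kwh(servers=200, kw_per_server=1.0, cooling_kw_per_it_kw=0.5)
for label, kwh in estimate.items():
    print(f"{label}: {kwh:,.0f} kWh per year")
```

Change the three inputs to match your own server room; the point is only that the cooling term grows in step with the server load.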

The next issue is that cable management, server installation and disaster management systems all add to the total implementation and running cost of the data center. So we cannot blame your finance manager if he thinks the IT division is one of the biggest financial burdens of the company.

The next big issue is that these servers, on which the company spends so much money and effort, often do not use even 10 percent of their capacity. It is just like a highly paid employee who sits idle for 7 hours out of an 8-hour work day. No management would tolerate such an employee, but the same company maintains these lazy servers in the comfort of an air-conditioned room.

Data center virtualization is the answer to almost all of the issues mentioned above.

We can also call it server virtualization: we divide a physical server into many virtual servers and run a server operating system on each of them.

The basic idea behind such virtual servers is not really new. IBM mainframes implemented a basic version of this idea in the 1960s. Microsoft used the concept in its 1993 release of Windows NT to run virtual DOS machines, and it currently uses virtualization to provide a compatibility mode for XP applications in Windows 7. But when this idea is developed further to meet the needs of data center management rather than individual desktops, we call it data center virtualization.

Energy saving is not the only area where data center virtualization shows its magic. By consolidating 100 or more physical servers into 6 or 7 physical machines, it also saves the effort and cost of cabling and the investment in switching and routing equipment. Nowadays even hardware functions like switching and routing are virtualized, making the life of an IT admin much easier.
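The consolidation arithmetic can be sketched in a few lines. This is a simplified sizing estimate, not a capacity planning method: the 100 servers and the roughly 10 percent utilization come from the discussion above, while the idea that each new host is twice as powerful as an old server and is loaded to 75 percent are assumptions chosen for illustration.

```python
import math


def hosts_needed(old_servers: int, avg_utilization: float,
                 host_capacity_ratio: float, target_utilization: float) -> int:
    """Estimate how many virtualization hosts can absorb a fleet of lightly used servers.

    host_capacity_ratio: how many old servers' worth of capacity one new host has (assumed).
    target_utilization: how heavily we are willing to load each new host (assumed).
    """
    # Total real work, measured in units of "one fully busy old server".
    total_work = old_servers * avg_utilization
    # Work one new host can take on without exceeding the target load.
    usable_per_host = host_capacity_ratio * target_utilization
    return math.ceil(total_work / usable_per_host)


# 100 servers at 10% load, onto hosts twice as powerful, each loaded to 75%.
print(hosts_needed(100, 0.10, 2.0, 0.75))
```

With these assumed inputs the estimate lands at 7 hosts. A real sizing exercise would also look at memory, storage, peak load and spare capacity for failures.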

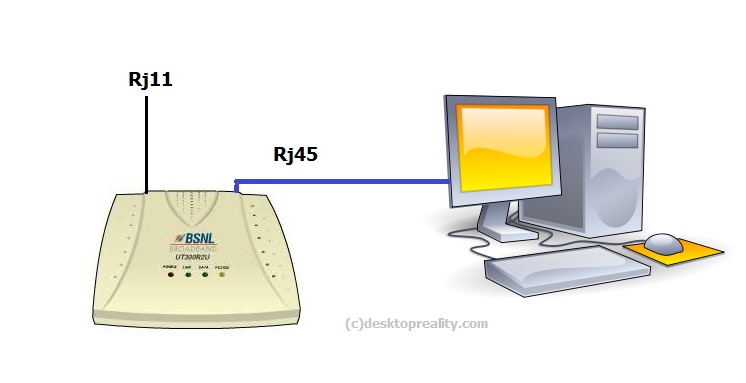

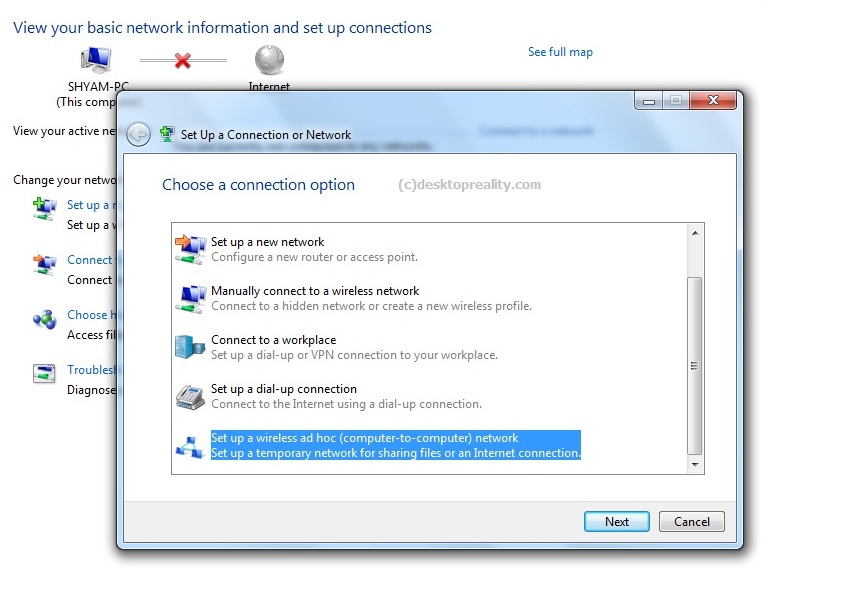

Now let us look at how data center virtualization is implemented. In a typical physical machine, the operating system is installed directly on top of the hardware, and the application software is installed on top of the operating system.

This basic model changes with virtualization. Rather than an operating system sitting directly on top of the hardware, a layer comes in between the real hardware and the operating system.

That means a virtualization layer is installed on top of the hardware. We call this layer the hypervisor. On top of the hypervisor you can create as many virtual computers as your hardware resources allow. Each of these virtual machines has its own virtual processor, virtual memory, virtual hard disk and virtual networking. The operating systems running on these virtual machines generally have no clue that they are running in a virtual environment. That means you are virtualizing the hardware and not the software.

Image Copyright (c) vmware.com

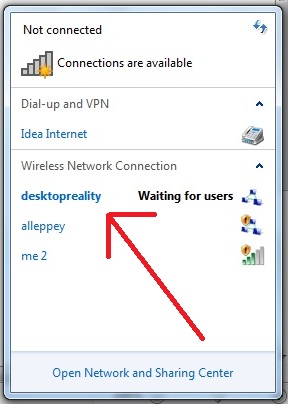
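The hypervisor model described above can be pictured as a small toy model. This is only a sketch to show the relationship between a host and its virtual machines; the class names, fields and the memory-only capacity check are my own simplifications and do not correspond to any vendor's API.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualMachine:
    """A virtual computer: every resource it sees is virtual."""
    name: str
    vcpus: int
    memory_gb: int
    disk_gb: int
    network: str = "vm-network"  # hypothetical virtual network name


@dataclass
class Hypervisor:
    """The layer between the real hardware and the guest operating systems."""
    host_memory_gb: int
    vms: list = field(default_factory=list)

    def free_memory_gb(self) -> int:
        # Memory of the physical host not yet promised to any virtual machine.
        return self.host_memory_gb - sum(vm.memory_gb for vm in self.vms)

    def create_vm(self, name: str, vcpus: int, memory_gb: int, disk_gb: int) -> VirtualMachine:
        # Simplification: only memory is checked against the physical host.
        if memory_gb > self.free_memory_gb():
            raise RuntimeError(f"not enough free memory on the host for {name}")
        vm = VirtualMachine(name, vcpus, memory_gb, disk_gb)
        self.vms.append(vm)
        return vm


host = Hypervisor(host_memory_gb=64)
host.create_vm("finance-db", vcpus=4, memory_gb=16, disk_gb=200)
host.create_vm("mail-server", vcpus=2, memory_gb=8, disk_gb=100)
print([vm.name for vm in host.vms], "free memory:", host.free_memory_gb(), "GB")
```

Each virtual machine only ever sees its own virtual resources, while the hypervisor keeps track of what the physical host can still offer.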

Each of these virtual machines, along with its operating system and application software, is in reality a mere collection of files. That means creating your next virtual machine from these files takes only a few minutes.
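Because a virtual machine is just files, cloning one is at its core a file copy. The sketch below demonstrates the idea with a made-up template folder; the file names `disk.img` and `machine.cfg` are hypothetical stand-ins, not the formats of any particular product, and a real platform also has to give the clone its own identity, such as a new network address.

```python
import shutil
import tempfile
from pathlib import Path


def clone_vm(template_dir: Path, new_vm_dir: Path) -> Path:
    """Create a new virtual machine by copying all the files of a template."""
    shutil.copytree(template_dir, new_vm_dir)
    return new_vm_dir


def demo() -> list:
    # Work in a throwaway directory so the example leaves nothing behind.
    with tempfile.TemporaryDirectory() as root:
        template = Path(root) / "template-vm"
        template.mkdir()
        # Hypothetical files standing in for a virtual disk and a configuration file.
        (template / "disk.img").write_bytes(b"\0" * 1024)
        (template / "machine.cfg").write_text("memory_gb = 4\n")

        clone = clone_vm(template, Path(root) / "finance-server")
        return sorted(p.name for p in clone.iterdir())


print(demo())
```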

If your company's finance division needs a server for a new project, the conventional physical machine approach takes a few days to order the new machine, install the operating system, cable it and test it before it is handed over. Now all you need is a few mouse clicks to get the new server up and running, and that too in just a few minutes. If you have remote access to your data center on your smartphone, you can do this from virtually any part of the world.

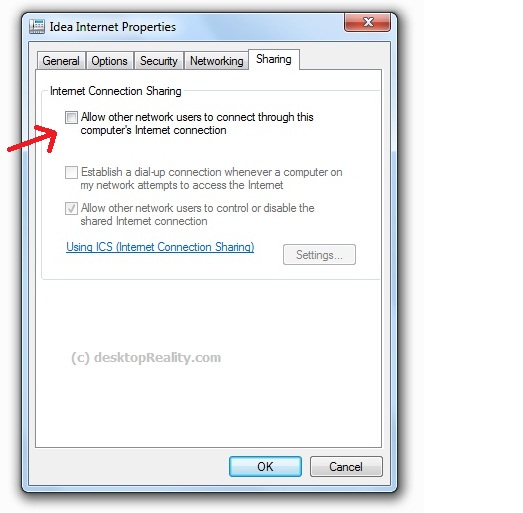

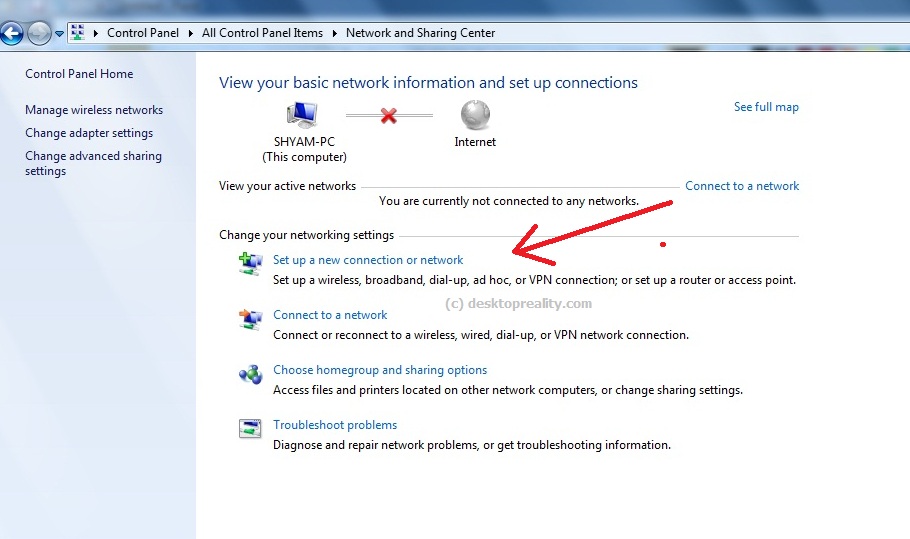

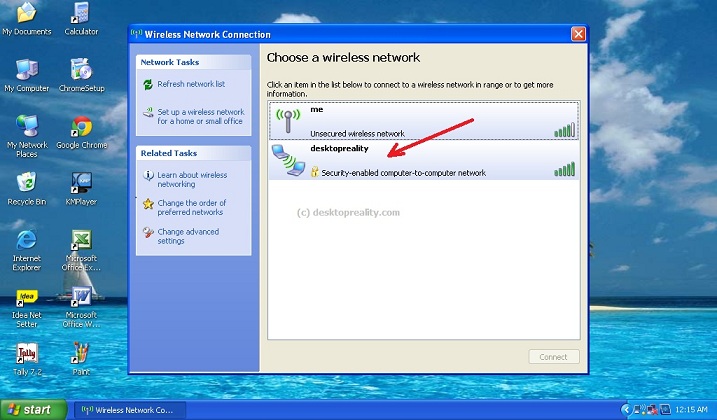

There are two kinds of virtualization for data centers: host OS based virtualization and bare metal virtualization.

In host OS based virtualization, a typical operating system is installed on top of your hardware, the virtualization layer is installed on top of that operating system, and the virtual machines are created on top of the virtualization layer.

Bare metal virtualization is more efficient because the virtualization layer is installed directly on top of the hardware. The virtualization layer itself acts as the operating system, and the virtual machines are created on top of it.

The operating systems installed on these virtual machines are called guest operating systems.
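The difference between the two approaches is easiest to see when the layers are written out from bottom to top. The lists below simply restate the description above as data; the layer names are my own labels.

```python
# Layers listed from the bottom (hardware) to the top (guest operating system).
HOST_OS_BASED = [
    "hardware",
    "host operating system",
    "virtualization layer",
    "virtual machine",
    "guest operating system",
]

BARE_METAL = [
    "hardware",
    "virtualization layer",
    "virtual machine",
    "guest operating system",
]


def layers_below_guest(stack: list) -> list:
    """Return every layer that sits underneath the guest operating system."""
    return stack[: stack.index("guest operating system")]


for name, stack in (("Host OS based", HOST_OS_BASED), ("Bare metal", BARE_METAL)):
    below = layers_below_guest(stack)
    print(f"{name}: {len(below)} layers below the guest -> {' > '.join(below)}")
```

The bare metal stack has one layer fewer between the guest and the hardware, which is where its efficiency advantage comes from.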

VMware is the world leader as far as the bare metal virtualization industry is concerned. Basic products like the ESXi free edition can be deployed without any license cost and can be used to explore the feasibility of virtualization in your company.

Naturally, the enterprise-class products come with a price tag (a somewhat high one), but they really make sense if your environment is mission critical.

Skilled manpower for the virtualization segment is scarce compared with the demand of this fast-expanding market. In addition to the normal infrastructure admin, this concept creates a new post, the virtualization admin, which is a blend of basic IT infrastructure skills, storage skills and virtualization skills.

This article is an attempt to cover the very basic concepts of data center virtualization. More topics can be discussed based on requests from readers like you. Please use the comment box for a more detailed discussion.
